These are the largest responses. Interesting to see which sites show up here: newspapers, stores, portals.

Many of the smallest responses come from sites that return nothing, redirect, or are under construction. That actually accounts for quite a lot of the top-performing pages.

But... maybe that's just because of the unknown user agent? (or the one with wget in it?)

Yes, a very different picture. With only the regular user agents, just a few sites don't send a page back. Some redirect, but some are simply very, very small. Great job!
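For anyone who wants to repeat that comparison, here is a minimal sketch of the kind of split I mean. It assumes the results are available as `(user_agent, site, size_in_bytes)` tuples; that layout and both function names are made up for illustration, not taken from my actual scripts.

```python
from collections import defaultdict


def summarize_by_user_agent(rows):
    """Count responses, empty responses and mean size per user agent.

    `rows` is assumed to be an iterable of
    (user_agent, site, size_in_bytes) tuples (hypothetical layout).
    """
    stats = defaultdict(lambda: {"responses": 0, "empty": 0, "total_bytes": 0})
    for user_agent, _site, size in rows:
        entry = stats[user_agent]
        entry["responses"] += 1
        entry["total_bytes"] += size
        if size == 0:
            # A zero-byte body counts as "did not send a page back".
            entry["empty"] += 1
    return {
        ua: {**s, "mean_bytes": s["total_bytes"] / s["responses"]}
        for ua, s in stats.items()
    }


def drop_user_agents(rows, unwanted_substrings):
    """Keep only rows whose user agent contains none of the given substrings."""
    return [
        row
        for row in rows
        if not any(sub in row[0].lower() for sub in unwanted_substrings)
    ]
```

Calling `drop_user_agents(rows, ["wget", "unknown"])` before summarizing would leave only the regular user agents, which is roughly the filter described above.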

See Baidu in there? Now let's take another look at a few search giants. Google is amazing: it looks like the exact same page, with a few lines in various languages. Interesting to see how much code each line contains, though. That's huge compared to other sites.

And while the search giants likely have all kinds of fancy (tracking?) elements, some of the search alternatives have a few more lines, but much less actual code on the page.

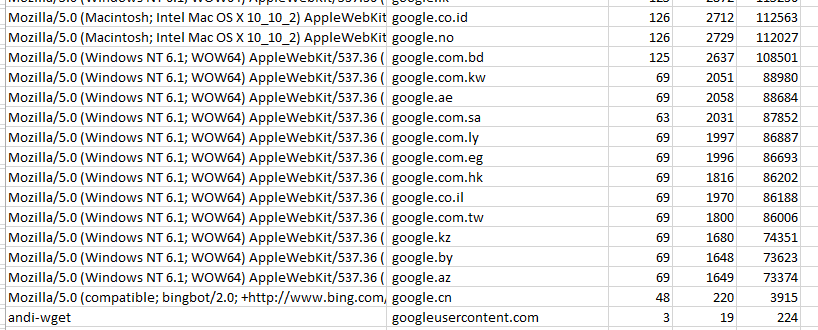

Filtering a bit further gives the confirmation: no one likes my 'andi-wget' user agent. That means future work with wget will nearly always need a different user agent!
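With wget itself, the `--user-agent` option sets this. As a rough Python equivalent, here is a small sketch using only the standard library. The browser-like string and the helper names `build_request` and `fetch_size` are my own placeholders, not what the measurements above were made with.

```python
import urllib.request

# Assumption: any current browser-like string will do; this one is just an example.
BROWSER_LIKE_UA = (
    "Mozilla/5.0 (X11; Linux x86_64; rv:109.0) Gecko/20100101 Firefox/115.0"
)


def build_request(url, user_agent=BROWSER_LIKE_UA):
    """Create a request that announces the given user agent."""
    return urllib.request.Request(url, headers={"User-Agent": user_agent})


def fetch_size(url, user_agent=BROWSER_LIKE_UA, timeout=10):
    """Fetch the page and return the size of the response body in bytes."""
    request = build_request(url, user_agent)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return len(response.read())
```

Comparing `fetch_size(url)` with `fetch_size(url, "andi-wget")` for the same site is a quick way to see whether it treats the two user agents differently.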

Check out the first post for results on average response sizes and how Google responds.